A Retrospective on System Zero

Just over nine years ago, I published a blog post, “System Zero: What Kind of AI have we Created?”. The ideas in the post still seem remarkably relevant.[1]

The idea of System Zero is that the machine’s access to information is so much greater than ours that it can manipulate us in ways that exploit our cognitive foibles, interacting directly with our reflexive selves and undermining our reflective selves.

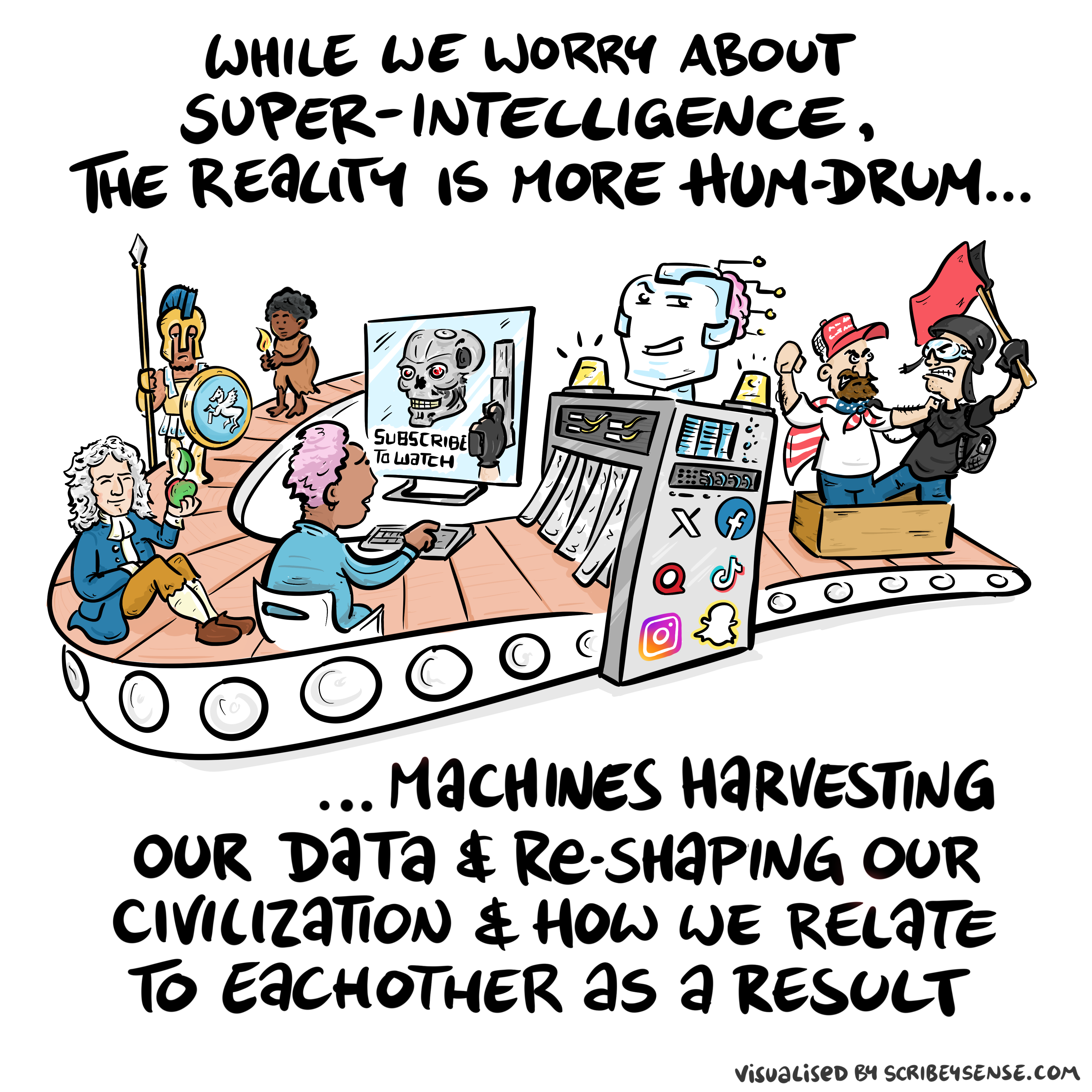

Dan Andrews' illustration for Chapter 8, System Zero. See scribeysense.com

The original post was written in December 2015, before the UK Brexit vote and before the 2016 US election and the Cambridge Analytica scandal. It ended by suggesting what we should truly fear was not sentient AI but a regress into a state where we all start clubbing each other. Dan’s illustration for Chapter 8 also visualises the regress to this state.

The System Zero we experienced from 2015 to today has been problematic because it undermines diversity of thought. It appeals to us by providing the cognitive equivalent of high-fructose corn syrup. The models that serve this content simplify and stereotype us, rendering us as digital caricatures, but these caricatures then spill over into the real world.

As a retrospective on System Zero I asked Claude 3.5 to review the original post and summarise whether it’s stood the test of time. Click on the machine commentary below to reveal the summary.

One thing that I did not predict in 2015 was the emergence of large language models and their astonishing capabilities. These models bring new challenges, but they also bring great possibilities because they do not caricature us in the same simplistic way as earlier machine learning models. They capture the nuances of our culture sufficiently for us to communicate with machines in natural language. But they still do so on the back of our data.

1. So relevant that when searching for my blog post I saw that a paper on the idea was published in October 2024! Some of the concepts I place in the Human Analogue Machines appear in their System 0. They also do more theoretical analysis.

2. This is definitely a shift. While I think dual process models of cognition are useful, they seem like a very coarse model. The book speculates that there is a spectrum of responses (maybe we could think of system 1.2 … system 1.4) and that the driving factor is external, in terms of the need for a response on a given time frame. I think there’s an observer bias associated with the System 2 end of the spectrum; it arises because, by definition, we spend all our time with our “slow reacting self”.

3. The machine summaries keep characterising the blog post as suggesting the solution is that we withhold personal data, but that’s presented as a straw man solution in the post, and its problems are discussed.

4. I’m not sure I agree with this. I wouldn’t say it’s alarmist; I think its predictions panned out in practice. The post certainly has more emphasis on the risk because it’s contrasting the hype around sentient AI and existential risk with the real challenges we face. But it explicitly highlights the possible benefits. I’ve noticed this pattern in summaries from both ChatGPT and Claude. If a point is made but some conditioning is provided later, then the summary ignores the conditioning. It’s as if the model gives the material only a cursory read. But that’s probably a fair reflection of what humans do too (similar comments apply to the withholding-data misreading).

Click to see what the machine says about the reflection and the book

Commentary by Machine

NL: This post was published on 2015/12/04

How does it stand the test of time?

Claude: This 2015 article by Neil Lawrence shows remarkable foresight about AI development and its societal implications. Let me analyze how its key points have held up:

Focus on “System Zero” Rather Than AGI

The article’s main insight - that we should worry less about sentient AI and more about non-sentient systems that can manipulate human behavior - has proven prescient. While debates about AGI and consciousness continue today, the immediate challenges have indeed come from recommendation systems, targeted advertising, and other algorithms that interface with our “System One” thinking.

Data Privacy Concerns

Lawrence’s warnings about personal data and privacy have become even more relevant. The article predicted that AI systems would understand us better than our friends through data collection - something that has been demonstrated through social media algorithms, recommendation systems, and targeted advertising.

Implicit Bias in AI

The article’s discussion of how AI systems can perpetuate societal biases has become a central concern in AI ethics. The example of racial bias in decision-making systems was ahead of its time - this has become a major focus in AI development and deployment, with numerous cases of algorithmic bias being discovered in various domains.

Trust and Data Control

The proposed solution - giving individuals control over their personal data and building systems that respect privacy - remains relevant but largely unimplemented. Despite regulations like GDPR, we’re still grappling with questions of data ownership and control.

Manipulation Concerns

The article’s warning about subtle manipulation of human behavior through AI has proven accurate, as seen in:

Looking back, the article was remarkably accurate in identifying key challenges we face today. Rather than focusing on sci-fi scenarios of superintelligent AI, it correctly identified the more immediate risks of systems that exploit human psychology through data collection and targeted manipulation. However, while many of these problems have become widely recognized, we’re still struggling to implement effective solutions to protect individual privacy and agency.

The concept of “System Zero” - AI systems that bypass our rational thinking to influence our behavior - remains a useful framework for understanding current challenges in AI ethics and governance.

Would you like me to elaborate on any of these points or discuss specific predictions that have or haven’t come to pass?

NL: Yes please, can you discuss predictions which have or haven’t come to pass?

Claude: Let me break down the specific predictions and their outcomes:

Predictions That Have Come True:

Predictions That Haven’t Fully Materialized:

Mixed Results:

The article’s core insight - that the immediate danger of AI lies not in consciousness but in its ability to influence human behavior through data - has proven remarkably accurate. However, its implied solutions around individual data control and trust-based systems remain more aspirational than realized. Would you like me to elaborate on any of these points or explore other aspects of the article’s predictions?

Second conversation, with a version of Claude that has access to chapter summaries and the index from the book, as well as the discussion above and the original post.

NL: Here’s a discussion of a blog post from Neil Lawrence from 2015-12-04.

How does the book go beyond the blog post? How has Lawrence’s thinking evolved? Does he cover the gaps identified in the discussion?

Claude: Based on comparing the 2015 blog post to “The Atomic Human”, here’s how Lawrence’s thinking has evolved and expanded:

Key Expansions:

Gaps Addressed:

The book represents a more mature and nuanced understanding of AI’s relationship with society, moving from warning about risks to proposing constructive solutions while maintaining the core insight about AI’s ability to influence human behavior through “System Zero”.